Two algorithms for the instantiation of structures of musical objects

Bernard Bel

This is an extended and revised version of the chapter “Symbolic and Sonic Representations of Sound-Object Structures”, published in M. Balaban, K. Ebcioglu & O. Laske (Eds.), “Understanding Music with AI: Perspectives on Music Cognition”, AAAI Press (1992, pp. 64-109).

Abstract

A representational model of discrete structures of musical objects at the symbolic and sonological levels is introduced. This model is being used to design computer tools for rule-based musical composition, where the low-level musical objects are not notes, but “sound-objects”, i.e. arbitrary sequences of messages sent to a real-time digital sound processor.

“Polymetric expressions” are string representations of concurrent processes that can be easily handled by formal grammars. These expressions may not contain all the information needed to synchronise the whole structure of sound-objects, i.e. to determine their strict order in (symbolic) time. To address this, the notion of “symbolic tempo” is introduced: all objects in a structure can be ordered once their symbolic tempos are known. Rules for assigning symbolic tempos to objects are therefore proposed. These form the basis of an algorithm for interpreting incomplete polymetric expressions. The relevant features of this interpretation are discussed.
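As a rough illustration of why symbolic tempos are enough to order objects, the sketch below places two concurrent fields on a common symbolic time axis. It is not the interpretation algorithm of the paper: the function name `symbolic_onsets`, the list-of-lists encoding of fields, and the convention that the first field fixes the total symbolic duration are all assumptions made for this example.

```python
from fractions import Fraction

def symbolic_onsets(fields, total=None):
    """Return (onset, field_index, name) triples sorted by symbolic onset.

    fields: non-empty lists of object names, one list per concurrent field.
    total:  common symbolic duration; by assumption it defaults to the
            number of objects in the first field (one time unit per object).
    """
    if total is None:
        total = Fraction(len(fields[0]))
    events = []
    for k, field in enumerate(fields):
        # Assumed rule: every field fills the same total duration, so its
        # symbolic tempo is (number of objects) / (total duration).
        tempo = Fraction(len(field)) / total
        step = 1 / tempo  # symbolic duration of one object in this field
        for i, name in enumerate(field):
            events.append((i * step, k, name))
    # Ties on onset are broken by field index, which gives a strict order.
    return sorted(events)

# Three objects against two objects in the same symbolic duration.
events = symbolic_onsets([["a", "b", "c"], ["d", "e"]])
order = [name for _, _, name in events]
```

Exact fractions are used so that onsets such as 3/2 compare without rounding error, which matters when the only goal is a strict ordering.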

An example is given to illustrate the advantage of using (incomplete) polymetric representations instead of conventional music notation or event tables when the complete description of the musical piece and/or its variants requires difficult calculations of durations.

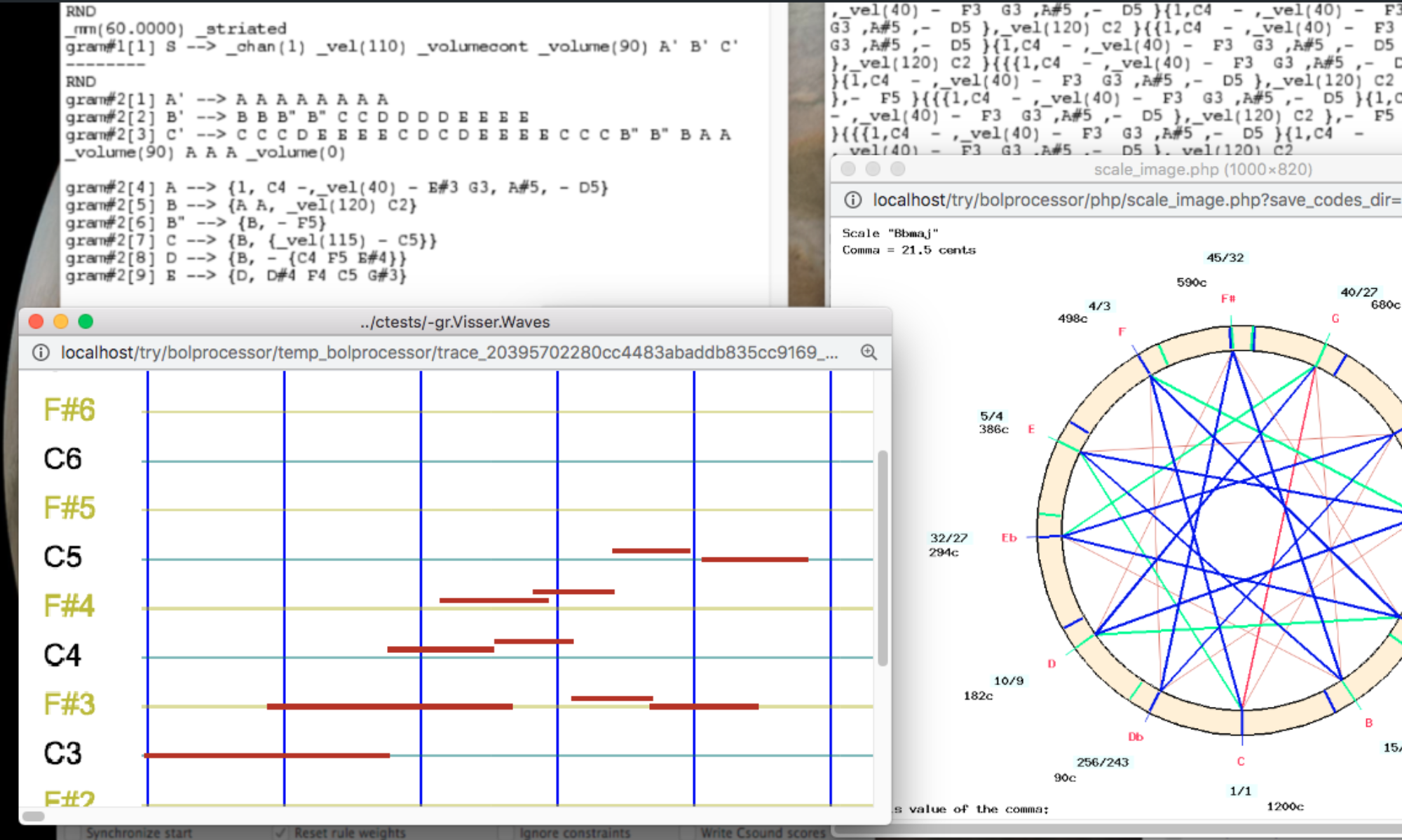

Given a strict ordering of sound-objects, summarised in a “phase table” representing the complete polymetric expression, the next step is to compute the times at which messages should be sent. This requires a description of “sound-object prototypes” with their metric/topological properties and various parameters related to musical performance (e.g. “smooth” or “striated” time, tempo, etc.). These properties are discussed in detail, and a polynomial-time constraint-satisfaction algorithm for the time-setting of sound-objects in a polymetric structure is introduced. Typical examples computed by this algorithm are shown and discussed.
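To make the step from symbolic order to message times concrete, here is a deliberately naive sketch. It assumes regularly spaced time streaks (a fixed metronome period), a pivot at the beginning of every object, and no constraints at all, so it leaves out the constraint-satisfaction part that the paper is actually about. The `PROTOTYPES` dictionary, the message strings, the `schedule` function and the flattened list used in place of a phase table are all hypothetical.

```python
from fractions import Fraction

# Hypothetical sound-object prototypes: each one is a list of
# (offset in ms from the object's start, message) pairs.
PROTOTYPES = {
    "a": [(0, "note_on 60"), (400, "note_off 60")],
    "b": [(0, "note_on 62"), (400, "note_off 62")],
}

def schedule(phase_table, period_ms=1000):
    """Map (symbolic_date, name) pairs to a sorted list of (time_ms, message).

    period_ms is the assumed physical duration of one symbolic time unit.
    """
    out = []
    for date, name in phase_table:
        start = date * period_ms  # physical date of the object's start
        for offset, message in PROTOTYPES[name]:
            out.append((int(start + offset), message))
    return sorted(out)

# Flattened stand-in for a phase table: object "a" at date 0, "b" at 3/2.
timeline = schedule([(Fraction(0), "a"), (Fraction(3, 2), "b")])
```

In this simplified setting every object starts exactly on its symbolic date; properties such as elasticity, truncation or relocation would change `start` and the offsets, which this sketch does not attempt.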

Excerpts from an AI review of this paper (Academia, June 2025)

Summary of the Work

The manuscript introduces a model for representing and instantiating musical structures made of discrete sound-objects. It explores separate representations for symbolic and physical time, then proposes methods to synchronize concurrent musical processes and to compute the precise scheduling of messages (or events) destined for a sound processor. The author conceptualizes “smooth” and “striated” time, describes “polymetric expressions” as high-level objects that can be incomplete, and provides algorithms to infer missing timing details. The paper merges formal language approaches, real-time considerations, and practical examples of usage in a software environment called Bol Processor.

Comprehensive Model of Time

- The clear distinction between symbolic time (strictly ordered events) and physical time (metronomic or arbitrary “striated” structures) provides an insightful way to handle simultaneous or overlapping musical events.

- The notion of “smooth” versus “striated” time is accompanied by helpful examples, making it straightforward to envision both contexts of performance.

Polymetric Expressions and Incomplete Descriptions

- The approach to polymetric expressions accommodates incomplete representations that can later be resolved by an algorithmic interpretation. This is particularly valuable for composers and researchers looking to experiment with generative or rule-based systems without being forced into fully specified notation from the outset.

- The examples and diagrams showing how one can map polymetric structures to a phase diagram illustrate the concept effectively.

Synchronization and Constraints

- The proposed synchronization algorithm handles concurrency by carefully propagating symbolic tempos across sequences. This helps manage cases where direct numeric durations are not known beforehand.

- The introduction of properties such as pivot alignment (PivBeg, PivCent, etc.), truncation, elasticity, and relocatability demonstrates thorough consideration of realistic performance scenarios (e.g., rubato, partial overlaps).

Practical Implementation and Examples

- The paper gives a thorough demonstration of how these algorithms integrate with a functional software environment, including tangible data structures (phase tables, instance tables) and step-by-step flowcharts.

- Musical examples and references to real-time computer music systems underscore the applicability of the approach.

Rich Technical Detail

- The stepwise pseudo-code for the time-setting algorithm is extensive and transparent. This level of detail can guide further experimental or production-level implementations.

- The complexity analysis (O(nmax² · imax³) in the worst case) provides an understanding of potential computational limits, helpful for anyone planning to use or extend these algorithms.

Potential Impact

- This work is useful to composers, researchers in algorithmic composition, and developers of music software who need robust synchronization methods that go beyond simple “note-by-note” scheduling.

- The proposed methods can enable more flexible representations of time and concurrency, allowing for richer generative or improvisational projects.

Summary of Review

Overall, this paper provides a detailed, formalized framework for resolving concurrent musical structures. It bridges the gap between abstract symbolic grammars and practical real-time performance considerations. Researchers and musicians interested in algorithmic composition or advanced computer-assisted composition tools will likely find its approach compelling, especially given the extensive examples and robust pseudo-code.
